Old age brings many challenges: as aging leads to physical and cognitive decline, even the simplest tasks become demanding.

To address this, Emory Medicine partnered with Georgia Tech to design technologies that support independent living among older adults.

My Role

I was the Lead Product Designer, focused on designing and testing AI experiences for older adults (60+). It was a 10-month engagement, from August 2020 to May 2021.

The AI assistant was deployed to millions of users on Google Nest and Android devices.

Chapter 1

Discovering the problem

We started by understanding users' context. UX researchers conducted ethnographic research and piloted a study by placing Google Nest devices in couples' homes to observe their natural interactions. I helped with data analysis.

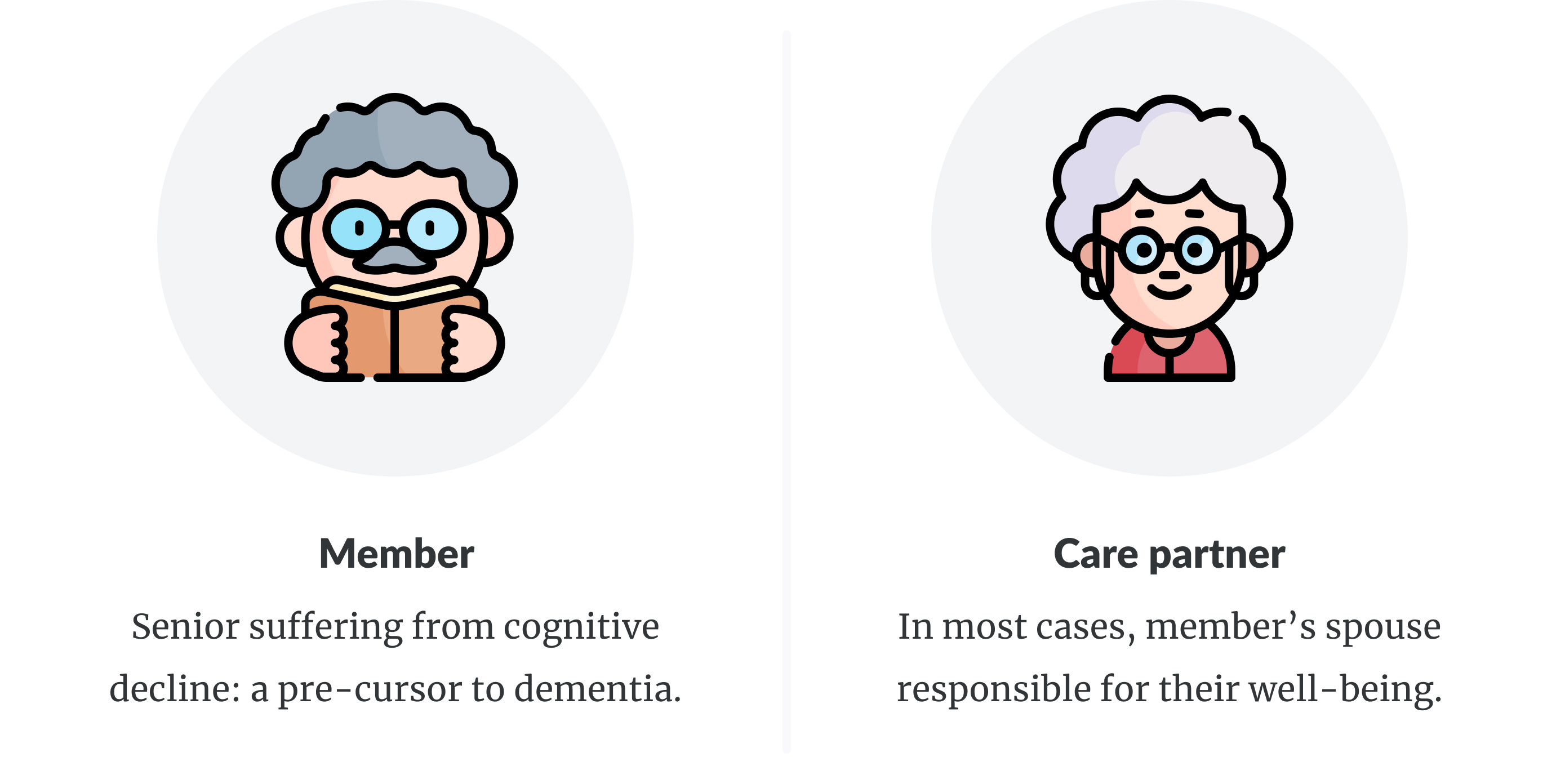

Our users

Our users were elderly couples, each made up of a member (an older adult experiencing cognitive decline) and their care partner.

Ethnographic research findings

In 60% of their interactions with the device, couples set medication alarms.

They also mentioned during interviews that missing critical doses impacted their health.

The research showed that managing medications was a major pain point.

I saw an opportunity to build an AI experience that supports them with their daily medications, and I aligned leadership on this idea.

How might we use conversational AI to support millions of older adults in managing their daily medications?

We used Google Nest as our pilot conversational AI platform because of its ability to handle long-form prompts.

Chapter 2

Getting deeper insights

To understand couples' existing medication management strategies, I collaborated with the UX researcher to conduct 2 focus groups with 50 couples.

Important Note: We conducted the sessions virtually because of the COVID-19 pandemic, and we devised new formats to keep the sessions engaging.

Understanding the ecosystem

I interviewed other care teams to understand the complete care ecosystem.

Members' immediate care network often consisted of spouses and adult children.

The conversational AI was situated in members' immediate vicinity and formed part of their primary care network.

Conversational AI was part of the primary care network

Research Insights

- Unreliable management practices: Couples placed pillboxes near objects they used daily, e.g., the stove, so they would remember to take their medications.

- AI became a family member: Members thought of the voice AI as a real person. They tried to converse with it as if it were a family member.

- Spouses acted as the gatekeepers: Spouses often felt burdened by the responsibility of checking whether members had taken their medications.

Can conversational AI relieve the care-partners from the responsibility of checking on members about their medications?

Chapter 3

Design goals

I formulated the design goals based on the research.


Fit seamlessly into existing routine

The AI intervention shouldn't compel the elderly couple to form new habits. It should fit seamlessly into their routine.

Mitigate the risk of double dosing

The AI system should prompt reflection rather than issue a command or suggestion.

Accommodate diverse scenarios

Design should be reliable and contextually aware, and should account for not just the highest-probability scenarios but also their variations.

Chapter 4

Mapping user expectations

To map different daily scenarios, I partnered with the UX researcher to conduct a participatory design activity with 35 couples. We storyboarded the AI as "Rosie-the-robot" and asked couples to help us write the story.

Participatory design to understand user expectations

Expectations From Conversational AI

- Persist reminder if busy: Users wanted the AI to keep checking in with them rather than triggering only once.

- Multi-platform design: Users also wanted the AI to remind them on their phone if they were not near the device at medication time.

- Adding delight on task completion: Users said they would be delighted if the AI praised them for having already taken their medications before it reminded them.

Chapter 5

Designing AI interactions: my process

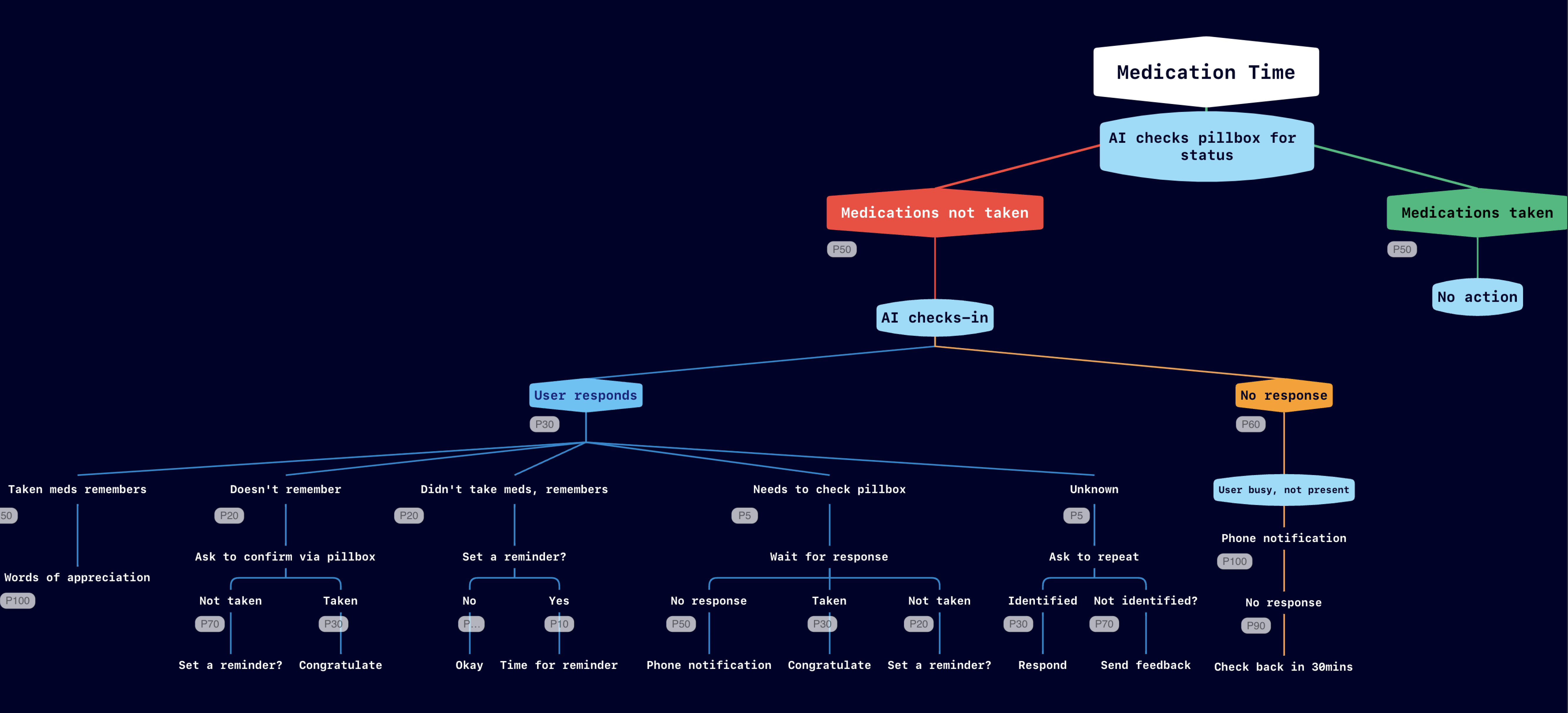

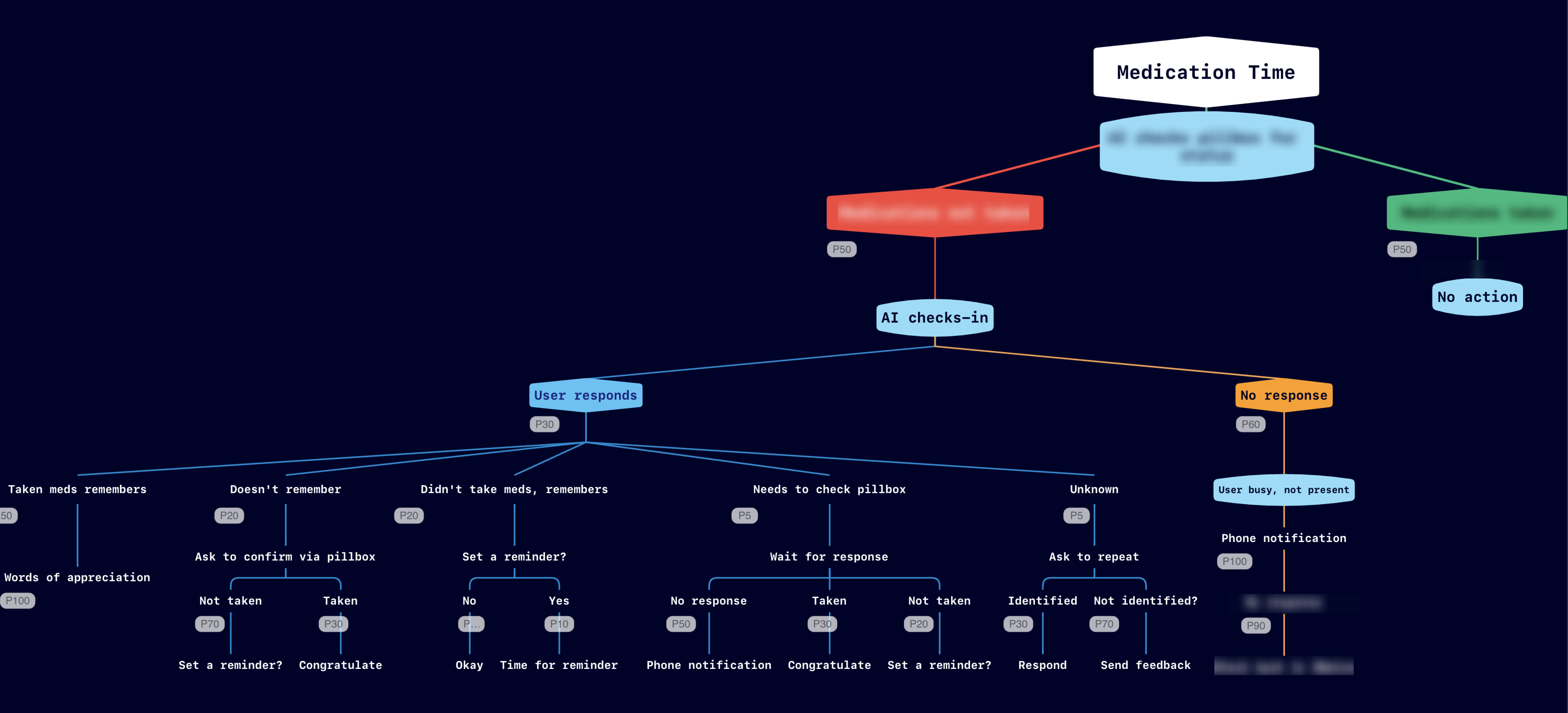

I started by mapping out different scenarios and their probabilities (image below).

Complete scenario map

Technical limitations that impacted design

Google Nest Hub is protected hardware, which limited our ability to customize the device. Some limitations that affected the design:

- Integration with third-party pillboxes not supported: Ideally, the AI assistant would kick into action only when a user forgets to take their pills. But since Google Nest didn't support integrations with third-party pillboxes, we could not make contextual reminders.

- No presence sensing: We wanted to adjust the AI assistant's volume based on presence: the closer someone is, the lower the volume; the farther away, the louder. However, the device lacked presence sensing, so we could not do this.

- No ability to snooze: We could only trigger the AI assistant by time, which prevented us from adding a "snooze" feature to the design.

Subset of scenarios that we implemented

Because multi-modal AI design was still a novel technology, I created methods for designing a holistic voice and visual experience.

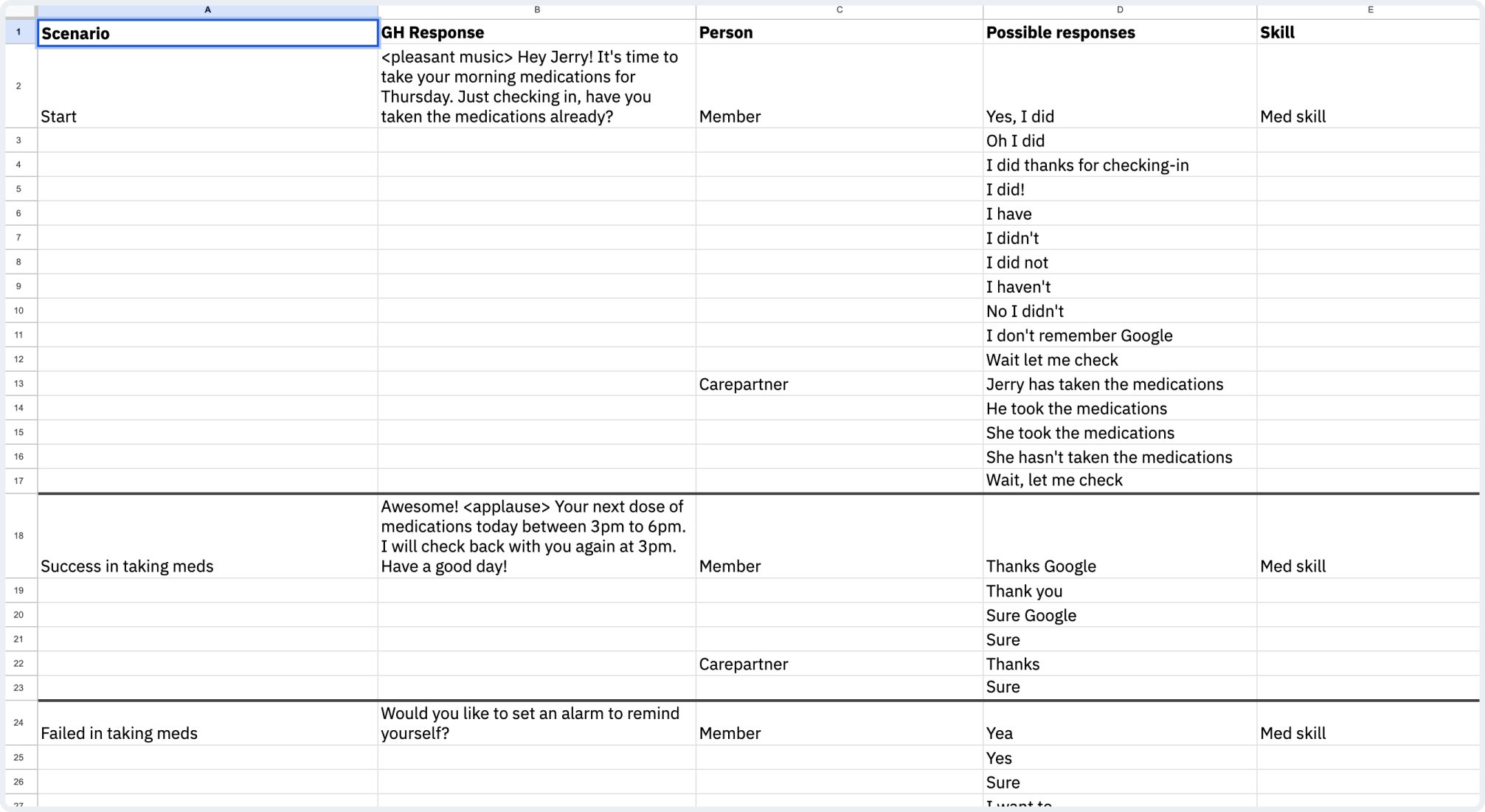

To accommodate various scenarios, I created a response chart of possible interactions. I also built a spreadsheet of possible semantic variations of responses to train the ML model.

Spreadsheet with possible semantic variations for each scenario
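To make the idea of a response chart with semantic variations more concrete, here is a minimal Python sketch of how such a chart could be represented: each scenario maps to a few phrasings a user might say and a check-in style reply. The intent names, phrases, replies, and functions (`RESPONSE_CHART`, `classify`, `respond`) are hypothetical illustrations, not the actual Med Companion implementation, and the naive substring matching stands in for a trained ML model.

```python
from typing import Optional

# Hypothetical response chart: scenario -> example user phrasings + reply.
# All intents, phrases, and replies are illustrative, not production data.
RESPONSE_CHART = {
    "already_taken": {
        "variations": ["i already took them", "done already", "took them this morning"],
        "reply": "Great job staying on top of your medications, {name}!",
    },
    "will_take_now": {
        "variations": ["i'll take them now", "going to take them", "taking them now"],
        "reply": "Sounds good, {name}. I'm here if you need anything.",
    },
    "not_sure": {
        "variations": ["i don't remember", "not sure", "did i take them"],
        "reply": "No worries, {name}. You could check your pillbox to see if today's dose is still there.",
    },
}

FALLBACK_REPLY = "Sorry, {name}, I didn't catch that. Have you taken your medications?"


def check_in_prompt(name: str) -> str:
    """Opening line phrased as a question (a check-in), not a command."""
    return f"Hi {name}, have you taken your medications?"


def classify(utterance: str) -> Optional[str]:
    """Naive matcher: first intent whose variation appears in the utterance."""
    text = utterance.lower().strip()
    for intent, entry in RESPONSE_CHART.items():
        if any(variation in text for variation in entry["variations"]):
            return intent
    return None


def respond(name: str, utterance: str) -> str:
    """Pick the reply for the matched intent, or fall back to re-asking."""
    intent = classify(utterance)
    template = RESPONSE_CHART[intent]["reply"] if intent else FALLBACK_REPLY
    return template.format(name=name)


print(check_in_prompt("Mary"))
print(respond("Mary", "I already took them"))
```

Keeping the opening line a question, and never telling an unsure user to take a dose, mirrors the check-in framing the design used to avoid double dosing.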

Introducing Med Companion

Your personal AI assistant to help with medication management.

Set your personalized medication schedule in the app, and the assistant automatically reminds you to take your medications at the right time.

Chapter 6

Final Interaction Models

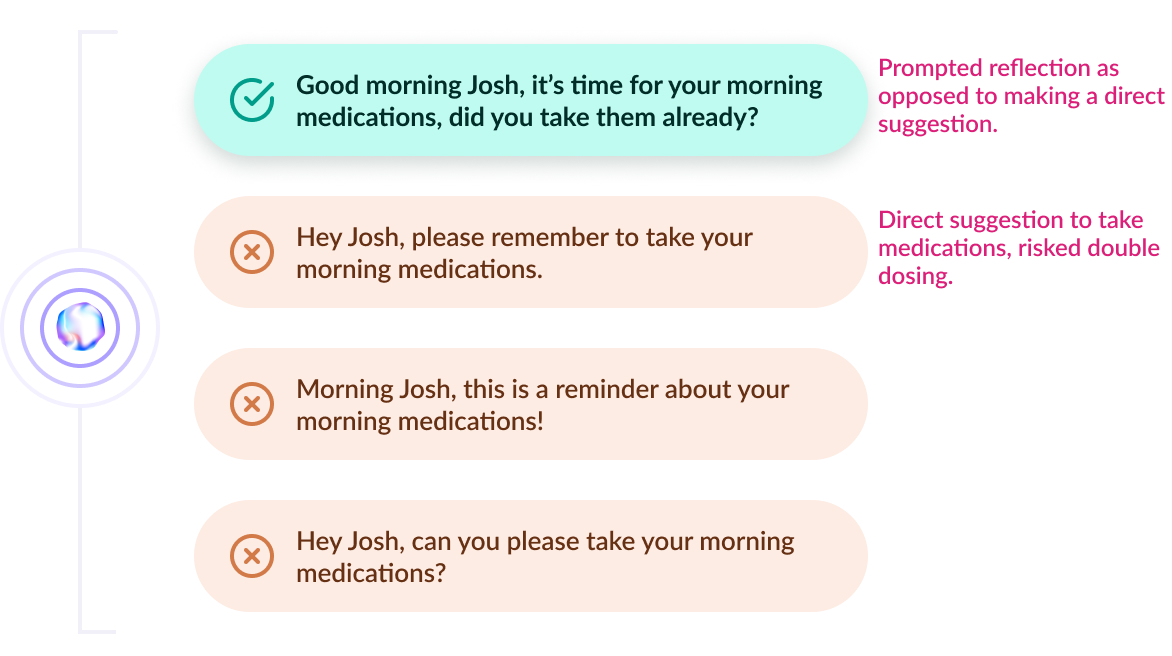

Designing for safety

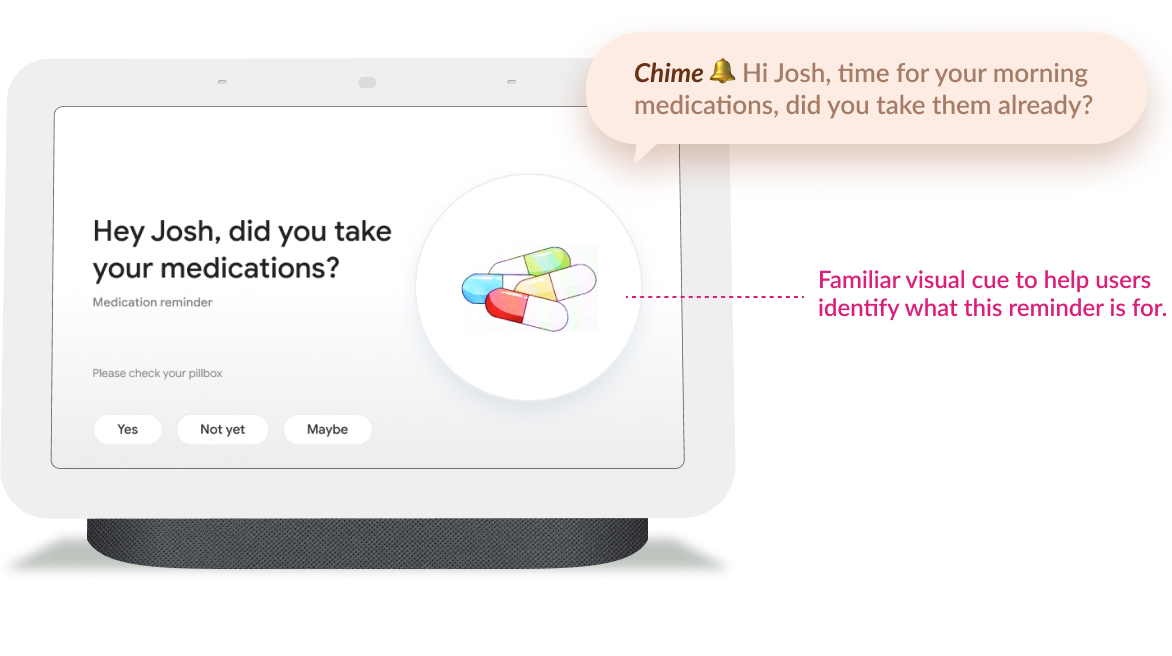

I structured the prompt as a check-in rather than a reminder. This was critical to avoid double dosing. Med Companion's language avoided any direct suggestion.

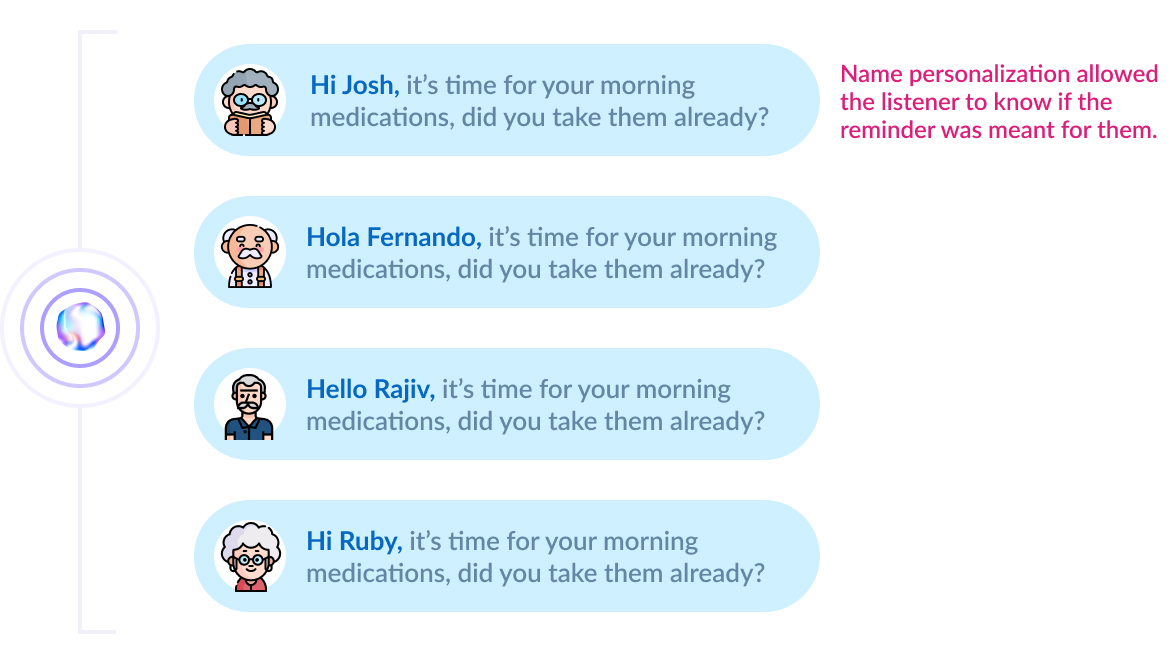

Name personalization for trust

Med Companion addressed each user by name. This gave users the context they needed to be more receptive when the assistant addressed them.

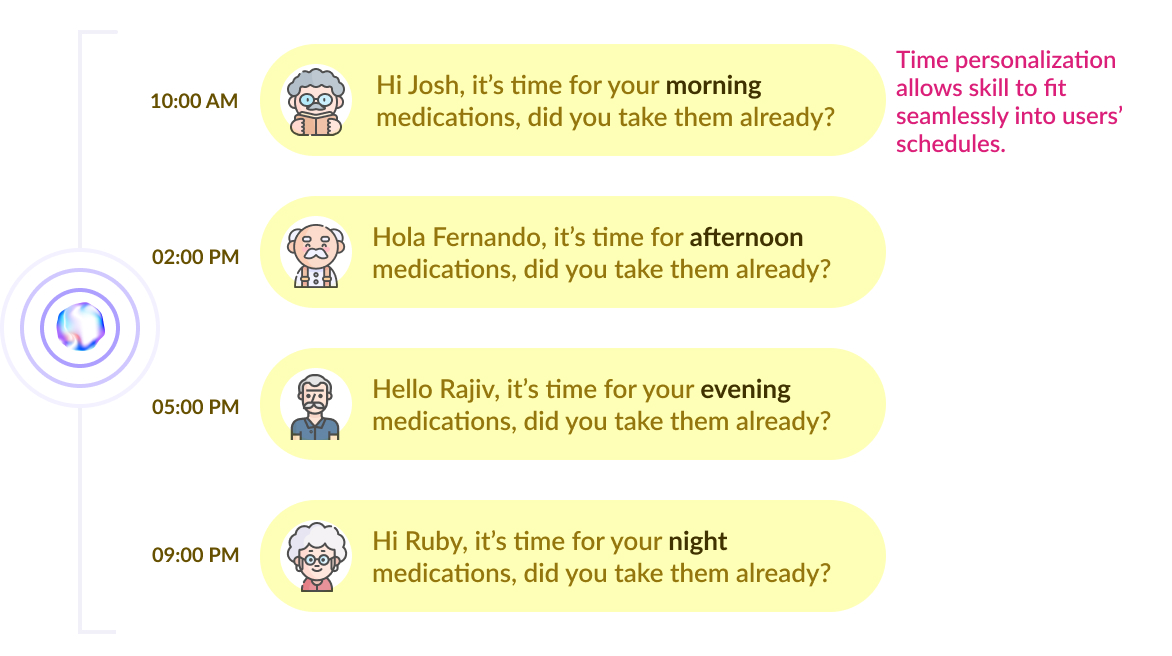

Time personalization for seamless experience

Med Companion could be adjusted to each user's medication schedule. Users could personalize it to match their medication times, allowing it to fit seamlessly into their routine.
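Because the device could only trigger the assistant by time, time personalization amounts to firing check-ins at user-chosen times. The Python sketch below shows one way to compute the next check-in from a daily schedule; the function name `next_check_in` and the schedule format are assumptions for illustration, not the shipped logic.

```python
from datetime import datetime, time, timedelta
from typing import List


def next_check_in(now: datetime, schedule: List[time]) -> datetime:
    """Return the next check-in at or after `now` for a daily schedule.

    Hypothetical helper: `schedule` holds the user's daily medication times.
    If every time today has already passed, wrap to the earliest time tomorrow.
    """
    if not schedule:
        raise ValueError("schedule must contain at least one time")
    today = now.date()
    for t in sorted(schedule):
        candidate = datetime.combine(today, t)
        if candidate >= now:
            return candidate
    return datetime.combine(today + timedelta(days=1), min(schedule))


# Example: medications at 8:00 and 20:00; it is 9:30 now.
print(next_check_in(datetime(2021, 3, 1, 9, 30), [time(8, 0), time(20, 0)]))
# 2021-03-01 20:00:00
```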

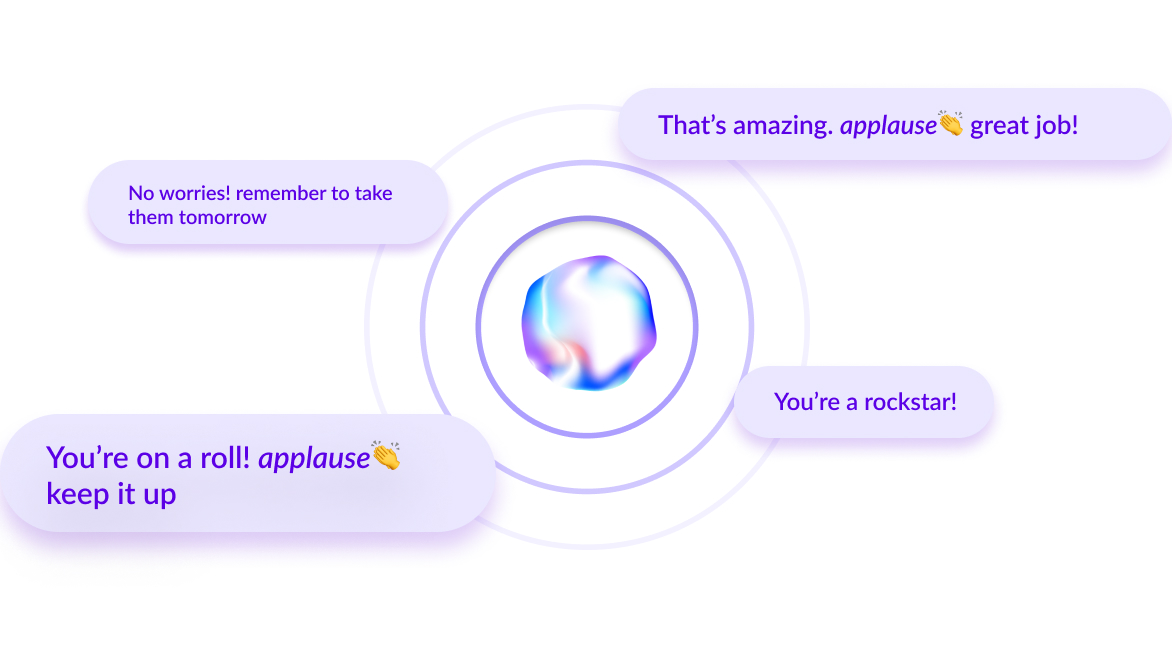

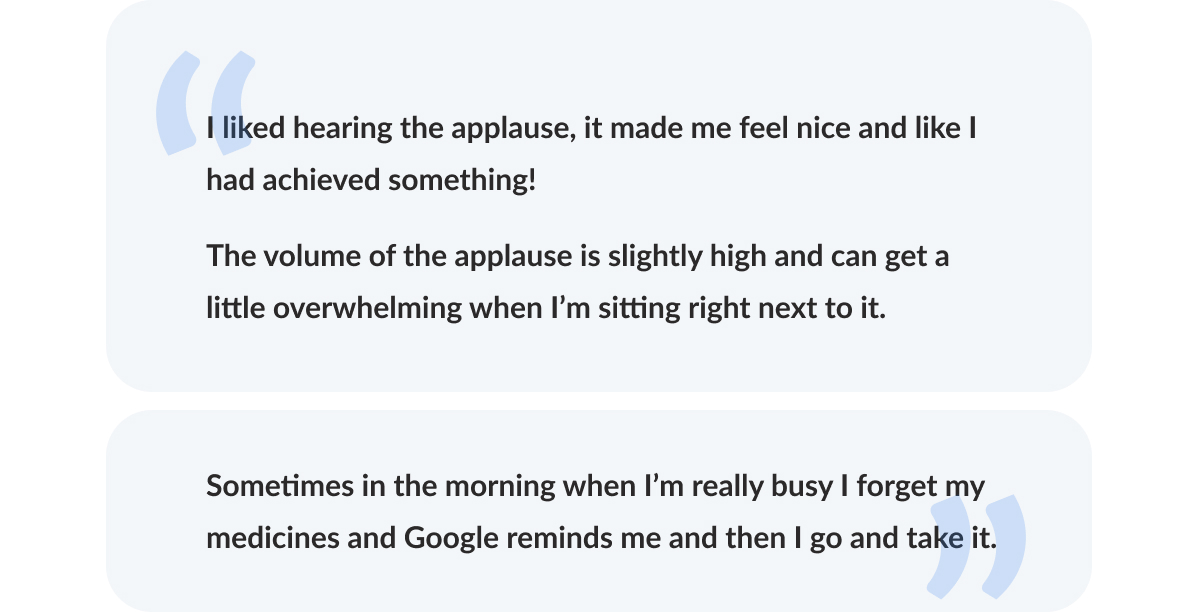

Positive reinforcement to build sustainable habits

Med Companion helped users build sustainable habits. It applauded them when they took their meds before the trigger and offered support if they forgot.

Designing for familiarity

Because Med Companion was a multi-modal skill, its visual interface was designed with supporting visuals and a familiar chime to make it easily recognizable.

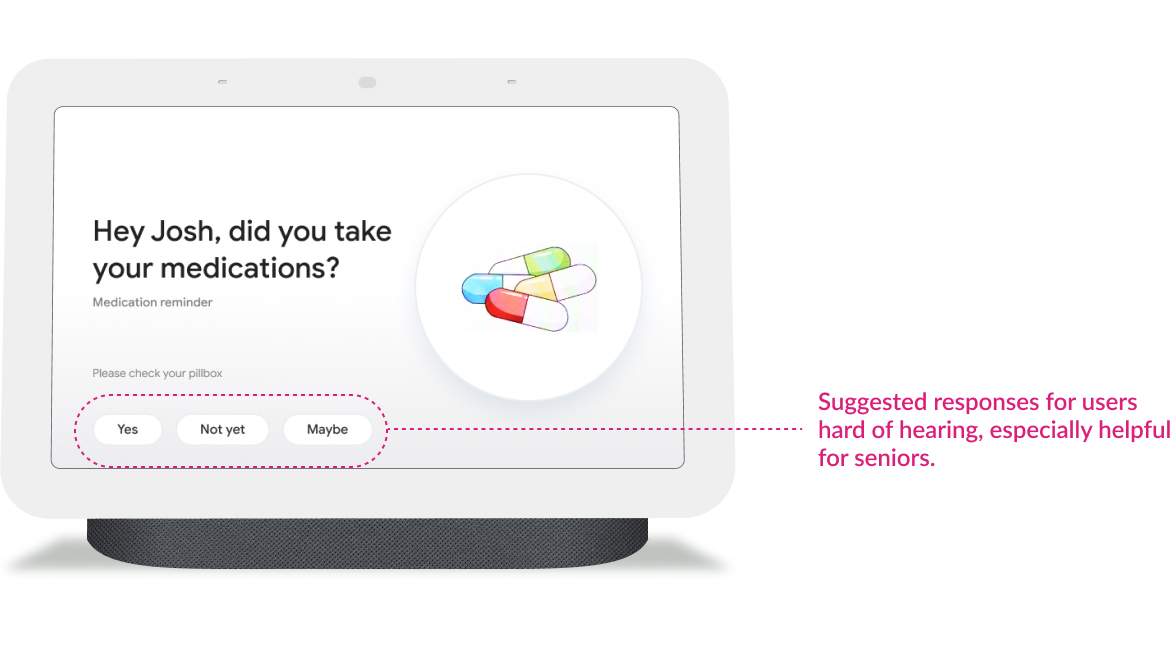

Suggestion prompts for accessibility

I included suggestion prompts at each stage to make Med Companion accessible to those hard of hearing.

Med Companion in action

Demo of Med Companion

Chapter 7

Test Results

After three weeks of deployment, I conducted semi-structured interviews to understand how the skill performed. Feedback was positive.

Qualitative Feedback

- Conversational AI kept users from missing medications: During interviews, users recalled several instances where they took their medications when the AI reminded them. It also reduced care partners' burden to a certain extent.

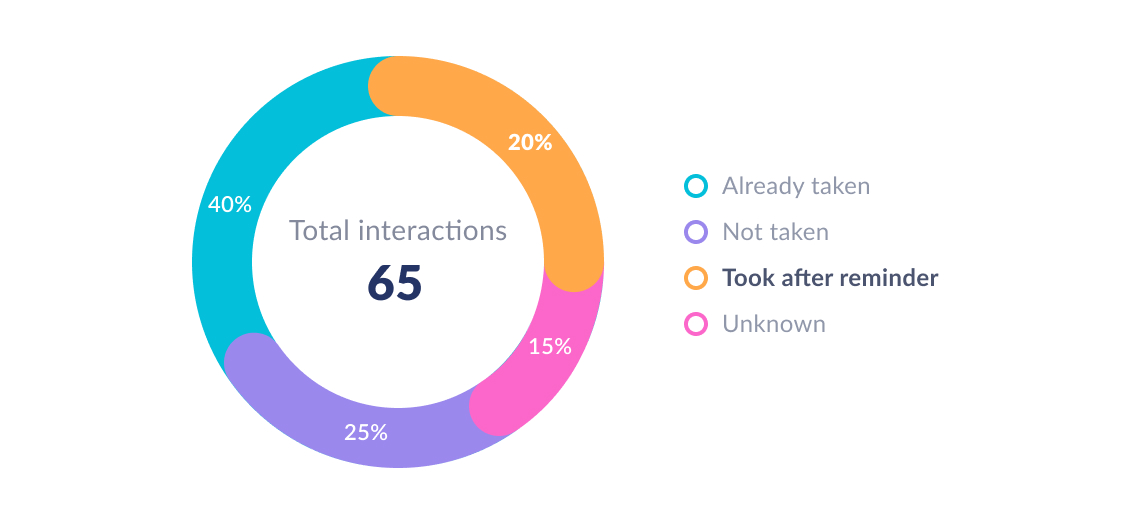

Quantitative Feedback

I analyzed interaction logs and found that in 20% of the cases, users had taken the medications after conversational AI had reminded them.

Missed medications dropped by 20% after the skill was deployed.

Impact✨

Med Companion was shipped to millions of users with Google Nest Hub devices, so they could customize their own AI assistant for medication management. It also won the Best Project Award at ASSETS Conference for Computing 2022.

Chapter 8

Learnings from the experience

- MVP solution better than no solution: Even though we designed a holistic solution, we only shipped the minimum viable part due to deadlines. I was nervous as the solution was far from perfect, but to my surprise, even that added value to our users' lives. Prioritizing product features to maximize user value can help break down the ideal vision into achievable goals.

- Successful remote collaboration: Planning became increasingly important because there were few opportunities for informal conversations. Planning ahead, Miro work sessions, and task trackers all became critical tools for remote alignment. Remote collaboration was new, but we found ways to make it work.

- Tremendous possibilities of multi-modal AI: Multi-modal AI was a relatively new technology in 2020. Even then, I could see its immense possibilities. I wrote an article at the time sharing my views on the future of AI, predicting that this technology could permeate almost every sphere of our lives.